Developing Ethical Frameworks for Internal AI Adoption and Governance

Let’s be honest. AI isn’t a future concept anymore—it’s in the trenches. It’s screening resumes, predicting inventory, drafting emails, and analyzing customer sentiment. The rush to adopt is palpable, a mix of genuine excitement and pure fear of falling behind.

But here’s the deal. Moving fast and breaking things doesn’t work when the “things” might be privacy, fairness, or trust. That’s where a robust, living ethical framework comes in. It’s not a buzzkill; it’s your guardrails on a winding mountain road. They don’t slow you down—they let you drive with confidence.

Why “Ethics by Design” Isn’t Just a Nice-to-Have

Think of it this way. You wouldn’t build a new factory without safety protocols. You wouldn’t launch a product without quality checks. AI systems can be harder to inspect than either, and their mistakes can reach further. An internal AI governance framework is your operational safety protocol. It moves ethics from a theoretical discussion in the boardroom to a practical checklist in the developer’s workflow.

Without it, you’re flying blind. The pain points are real: algorithmic bias leading to discriminatory hiring, opaque decision-making that erodes employee trust, data pipelines that accidentally violate consent. A framework turns reactive panic into proactive governance.

Core Pillars of an Internal AI Ethics Framework

Okay, so what’s in this thing? It’s not a one-page manifesto. It’s a multi-layered approach that touches culture, process, and technology. Let’s break it down.

1. Accountability & Human Oversight (The “Who’s in Charge?” Principle)

First rule: AI should augment human judgment, not replace it. Your framework must clearly define human-in-the-loop checkpoints. Who is ultimately responsible for the AI’s output? Is it the data scientist? The product manager? The legal team?

Assign an AI ethics officer or a cross-functional governance committee. Their job isn’t to say “no” to everything, but to ask the hard questions early. “Can we explain this decision to an affected employee?” “What’s our fallback plan if the model fails?”
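To make a human-in-the-loop checkpoint concrete, here is a minimal Python sketch of how a model output might be routed. Everything in it is an assumption for illustration: the `ModelDecision` fields, the `CONFIDENCE_FLOOR` value, and the status labels are hypothetical, and your own rules for what counts as high impact will differ.

```python
from dataclasses import dataclass

# Hypothetical threshold, chosen for illustration only.
CONFIDENCE_FLOOR = 0.90


@dataclass
class ModelDecision:
    subject_id: str
    recommendation: str
    confidence: float
    high_impact: bool  # e.g. affects hiring, pay, or employment status


def route_decision(decision: ModelDecision, accountable_owner: str) -> dict:
    """Decide whether an output is applied automatically or waits for human sign-off."""
    # High-impact or low-confidence outputs always go to a person.
    needs_review = decision.high_impact or decision.confidence < CONFIDENCE_FLOOR
    return {
        "subject_id": decision.subject_id,
        "recommendation": decision.recommendation,
        "status": "pending_human_review" if needs_review else "auto_applied",
        # Every record names the person who answers for the outcome.
        "accountable_owner": accountable_owner,
    }
```

The point of the sketch is that the accountable owner is recorded on every decision, so "who is responsible?" always has an answer.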

2. Fairness & Bias Mitigation

This is the big one. AI models learn from historical data. And, well, our history is messy. An ethical AI adoption strategy mandates bias audits at multiple stages.

It means looking for demographic skews in your training data. It means testing outputs across different user groups. It’s not a one-time fix; it’s continuous monitoring. Because a model that works fairly today might drift tomorrow.
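One simple way to test outputs across groups is to compare selection rates. The sketch below is a starting point, not a full audit: the function names and sample data are made up, and the 0.8 cutoff is the "four-fifths" rule of thumb from US employment guidance, used here only as an example flag. It is not a legal test, and it is only one of several fairness measures.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up sample: group A has 4 of 10 selected, group B has 2 of 10.
sample = [("A", i < 4) for i in range(10)] + [("B", i < 2) for i in range(10)]
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative threshold, an assumption rather than a standard you must use
```

Rerunning the same check on fresh production data at regular intervals is one way to notice drift.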

3. Transparency & Explainability

If an AI makes a decision that affects someone’s career, loan, or workload, “the algorithm decided” is not an acceptable explanation. Your framework needs to define levels of explainability based on impact.

High-impact decisions (like promotions or firings) require high clarity—maybe even a simple, plain-language reason. Lower-impact ones might just need clear documentation of the model’s purpose and data sources. The goal is to demystify, to build trust through openness.
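The tiers above could be written down as data so a reviewer can check them mechanically. This is a sketch under assumptions: the two tiers mirror the paragraph above, while the `Impact` enum, the requirement strings, and the function name are hypothetical. A real framework would likely define more tiers.

```python
from enum import Enum


class Impact(Enum):
    LOW = "low"
    HIGH = "high"


# Requirement labels are illustrative; adapt the wording to your own charter.
EXPLAINABILITY_REQUIREMENTS = {
    Impact.LOW: [
        "documented model purpose",
        "documented data sources",
    ],
    Impact.HIGH: [
        "documented model purpose",
        "documented data sources",
        "plain-language reason for the affected person",
    ],
}


def missing_requirements(impact: Impact, provided: set) -> list:
    """Return the requirements for this impact tier that have not been provided yet."""
    return [req for req in EXPLAINABILITY_REQUIREMENTS[impact] if req not in provided]
```

For example, a promotion model with only its purpose and data sources documented would still be missing the plain-language reason.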

4. Privacy & Data Stewardship

AI is hungry for data. Your framework is its diet plan. It must enforce data minimization: only use what’s strictly necessary. It must ensure a lawful basis for processing personal data, such as informed consent, and it must mandate robust security to protect that data throughout the AI lifecycle. This is where responsible AI governance meets GDPR and other regulations head-on.
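Data minimization can be enforced in code with a per-purpose allowlist. The sketch below assumes a hypothetical `ALLOWED_FIELDS` mapping and made-up field names; the idea is simply that a pipeline refuses any purpose nobody has approved and drops every field that purpose does not need.

```python
# Hypothetical allowlist: each approved purpose names the only fields it may use.
ALLOWED_FIELDS = {
    "resume_screening": {"skills", "years_experience", "certifications"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields approved for this purpose; reject unapproved purposes."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        raise ValueError(f"No approved field list for purpose: {purpose!r}")
    return {key: value for key, value in record.items() if key in allowed}


applicant = {
    "name": "Example Person",
    "date_of_birth": "1990-01-01",
    "skills": ["sql", "python"],
    "years_experience": 6,
}
screening_input = minimize(applicant, "resume_screening")
# screening_input keeps "skills" and "years_experience"; name and date of birth are dropped
```

Failing loudly on an unknown purpose is a deliberate choice here: it forces someone to add the purpose to the allowlist, which is a natural review point.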

Building Your Framework: A Practical, Phased Approach

Don’t try to boil the ocean. Start small and build up in phases. Here’s a possible path forward.

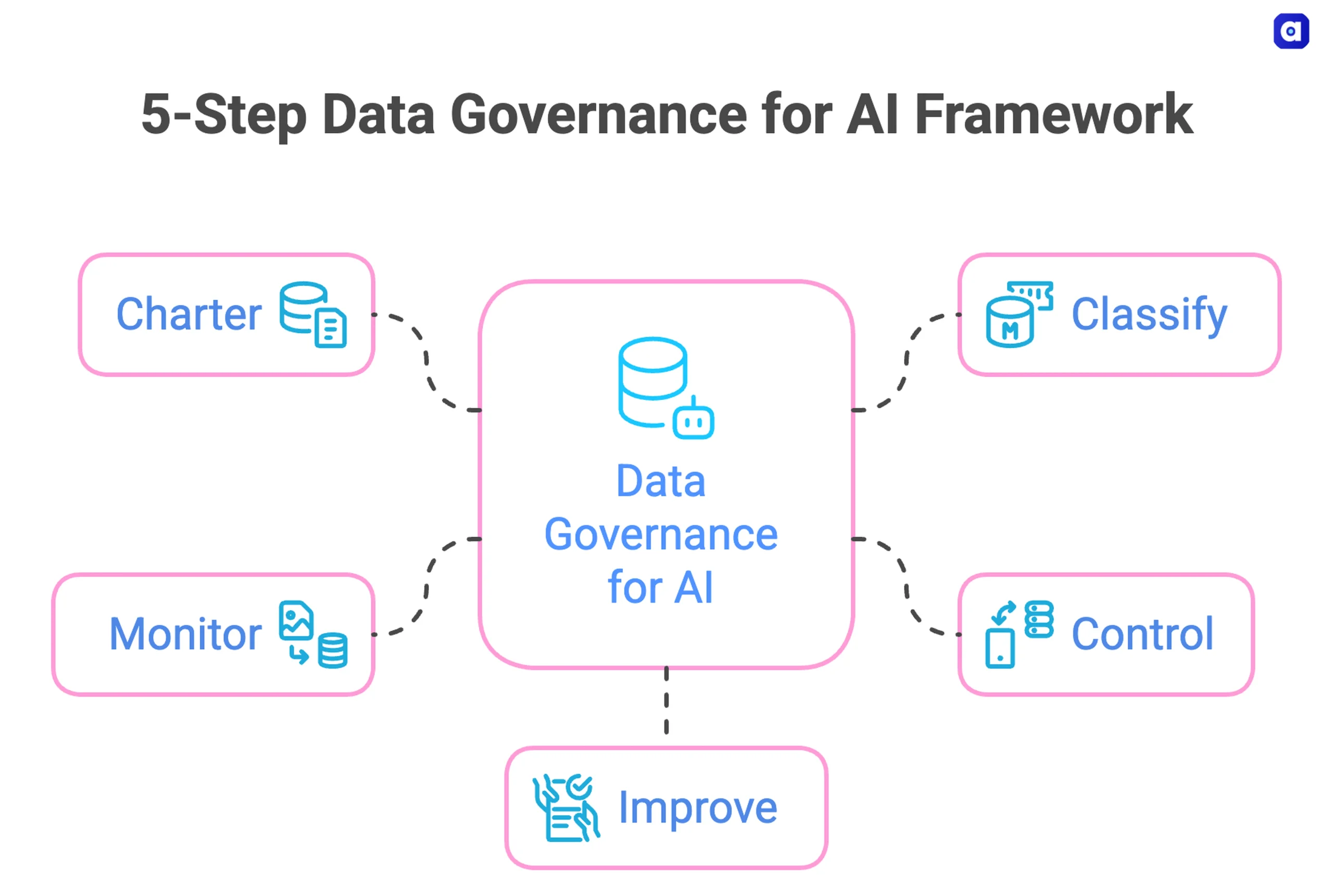

- Phase 1: Assess & Educate. Take inventory of all AI/ML tools already in use (you’ll find more than you think). Run basic ethics workshops for leaders and tech teams. Create a draft charter.

- Phase 2: Pilot & Protocol. Apply your draft framework to one new, medium-risk AI project. A customer service chatbot, maybe. Document every hurdle, every question. Create simple templates—like an AI impact assessment questionnaire.

- Phase 3: Scale & Institutionalize. Integrate the assessment questionnaire into your standard project kickoff process. Establish your review committee. Make ethics a required line item in budgets and timelines.

Honestly, the tool you use to track this matters less than the habit you build. A shared spreadsheet can work better than a fancy, unused platform.
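The AI impact assessment questionnaire from Phase 2 can start just as small. Here is a hedged sketch: the four questions, the `risk_tier` name, and the scoring thresholds are all assumptions for illustration, and a real committee would write its own.

```python
# Illustrative yes/no questions; the keys double as column names in a spreadsheet.
QUESTIONS = {
    "uses_personal_data": "Does the system process personal data?",
    "affects_individuals": "Does it influence decisions about individual people?",
    "fully_automated": "Does it act without a human reviewing the output?",
    "hard_to_explain": "Would we struggle to explain an output to the person affected?",
}


def risk_tier(answers: dict) -> str:
    """Map yes/no answers to a rough tier. Thresholds are arbitrary placeholders."""
    missing = QUESTIONS.keys() - answers.keys()
    if missing:
        raise ValueError(f"Unanswered questions: {sorted(missing)}")
    yes_count = sum(bool(answers[key]) for key in QUESTIONS)
    if yes_count >= 3:
        return "high"
    if yes_count >= 1:
        return "medium"
    return "low"


chatbot = {
    "uses_personal_data": True,
    "affects_individuals": False,
    "fully_automated": True,
    "hard_to_explain": False,
}
tier = risk_tier(chatbot)  # two "yes" answers land in the "medium" tier
```

Refusing to score an incomplete questionnaire matters more than the exact thresholds: it keeps teams from skipping the uncomfortable questions.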

Operationalizing Governance: The Daily Grind

A framework in a PDF is dead. It needs to live in the daily workflow. That means checklists, clear roles, and regular reviews.

| Stage | Key Governance Question | Responsible Party |
| --- | --- | --- |
| Problem Scoping | “Is AI the right solution here? What are the ethical risks?” | Product Lead + Ethics Officer |
| Data Sourcing | “Do we have rightful use? Is this data biased?” | Data Scientist + Legal |
| Model Development | “Can we explain its decisions? Have we tested for fairness?” | ML Engineer |
| Deployment & Monitoring | “Is it performing as intended? Is drift causing ethical harm?” | Ops Team + Governance Committee |

See? It’s about baking the questions into the process, not adding a huge extra burden at the end.
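If you want the table to do more than sit in a document, it can be encoded as a set of stage gates. In this sketch the stages and responsible parties come from the table above, while the `GATES` structure and the `outstanding_signoffs` function are hypothetical names. It is one possible shape, not a prescribed workflow.

```python
# Stages and responsible parties taken from the governance table.
GATES = {
    "Problem Scoping": {"Product Lead", "Ethics Officer"},
    "Data Sourcing": {"Data Scientist", "Legal"},
    "Model Development": {"ML Engineer"},
    "Deployment & Monitoring": {"Ops Team", "Governance Committee"},
}


def outstanding_signoffs(stage: str, signed_off_by: set) -> set:
    """Return the responsible parties who have not yet signed off on this stage."""
    if stage not in GATES:
        raise KeyError(f"Unknown stage: {stage!r}")
    return GATES[stage] - signed_off_by


def can_advance(stage: str, signed_off_by: set) -> bool:
    """A project moves past a stage only when every responsible party has signed off."""
    return not outstanding_signoffs(stage, signed_off_by)
```

For instance, `outstanding_signoffs("Data Sourcing", {"Legal"})` returns `{"Data Scientist"}`, which tells the project lead exactly whose answer is still missing.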

The Human Culture Shift

All this process talk is crucial, but the hardest part is cultural. You need to foster psychological safety where employees can actually raise red flags without fear. Celebrate the team that pauses a launch to fix a bias issue. That’s a win, not a delay.

Train people. Not just engineers, but HR, marketing, operations. Everyone should know the basic principles—your ethical AI principles—like they know the company mission statement.

The Tangible Benefits of Getting This Right

This isn’t just about avoiding disaster (though that’s a pretty good reason). It’s about building something durable. A strong internal AI governance framework helps attract talent who care about responsible tech. It builds customer and employee trust. It leaves you better prepared for regulatory shocks.

In fact, it can become a competitive moat. When your competitors are facing scandals and fines, your ethical groundwork lets you keep innovating, responsibly and with confidence.

So, where does that leave us? The path to ethical AI isn’t a straight line. It’s iterative, sometimes messy, and demands constant vigilance. It asks us to be humble about the technology’s limits and ambitious about our own human responsibility.

The goal isn’t a perfect, faultless system. That’s a fantasy. The goal is a thoughtful, accountable organization that can harness this incredible tool without losing its soul in the process. That’s the real framework—not of rules, but of integrity.